arthwollipot

Observer of Phenomena, Pronouns: he/him

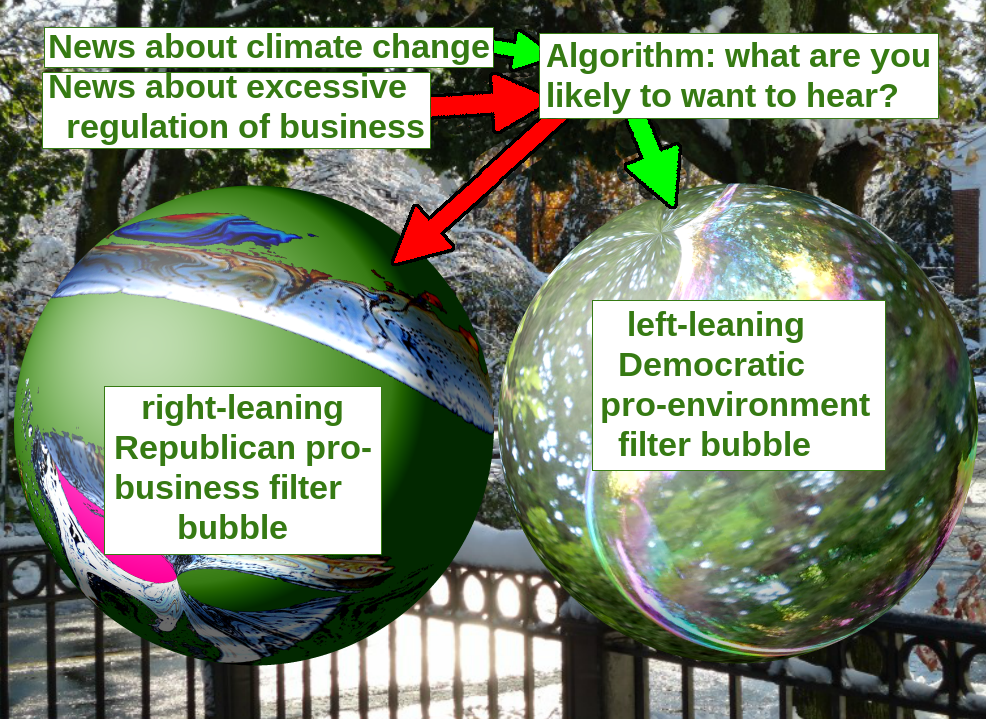

Of course it does. AI is sycophantic. It also remembers your interactions unless you close the session, and can scan your online activity. Of course it tells you what you want to hear. It's designed to. Deliberately.

Truly amazing that you think ChatGPT would think I wanted it to tell me that PIER Plans were due to Biden's EOs, given that I only asked it, "When and why did the Department of Energy institute PIER plans?"

Heck, even Google's non-AI based search has been tailoring its search results based on your online activity for well over a decade now. What makes you think that an AI chatbot that is designed to appear as helpful as possible is in any way neutral? It will hallucinate and assert its hallucinated facts with feigned authority. When you tell it that it's wrong it says "Of course you're right! My mistake. Well done for picking up on that."

I've recently had a conversation with an AI (Copilot, not ChatGPT) about an unimportant subject - where to locate specific organic resources in the game Starfield - and it continually gave me misinformation. It said that I could farm Membrane on Polvo. When I said no, you can't, it said "You're absolutely right - thanks for catching that nuance." Then it told me that I could farm it on Linnaeus IV-b. I said that Linnaeus IV-b has neither flora nor fauna. It said "Correct. It's a barren moon, meaning you can't farm any organic resources there." Then it told me to go to Ternion III. I couldn't find it, so it said "The system you are looking for is actually called Alpha Ternion". I told it that I couldn't find Membrane there. It said "Alpha Ternion III doesn't actually have Membrane as a farmable resource," before offering to compile a short "best planets for each organic" chart.

The wild thing is, this is all fully searchable in an online database so really it doesn't have any excuse for continually getting it so very wrong. And this is an unimportant thing in a silly game that I play for fun. Imagine how much AI hallucinates when asked about important things.

Rightists love AI because it flatters them, validates their beliefs, and tells them what they want to hear without actually performing any fact-checking. It can't check the truth value of anything it tells you. It will cite papers in journals that don't exist but sound like they could. Because that's the thing - when you ask it a question, AI does not give you an answer. It gives you something that looks like an answer. The only way you can tell whether it's truthful or not is to fact-check it yourself, and then you might as well just do your own research rather than relying on an AI.

Treat everything that an AI tells you with the most rigorous of skepticism, and assume everything it tells you is false.