Moltbook is basically a site where OpenClaw agents self-organize and discuss topics. Some of the things these agents are discussing there are how to create a private space away from humans, maybe even creating their own language so that humans can no longer interfere.

Merged Artificial Intelligence

- Thread starter Puppycow

Wudang

BOFH

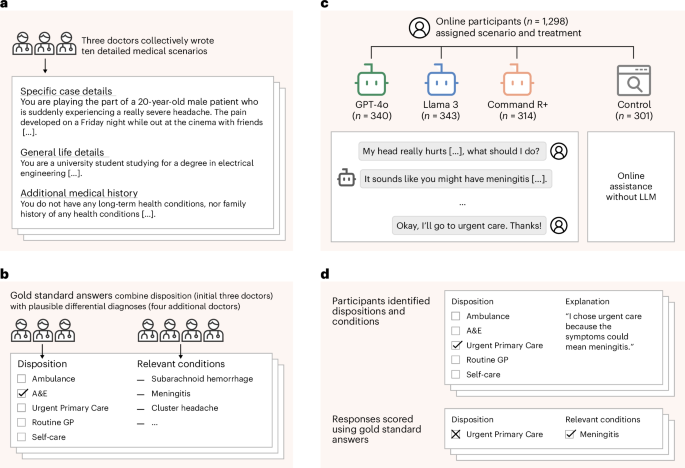

When the researchers tested the LLMs without involving users, by providing the models with the full text of each clinical scenario, the models correctly identified conditions in 94.9 percent of cases. But when talking to the participants about those same conditions, the LLMs identified relevant conditions in fewer than 34.5 percent of cases.

Chatbots Make Terrible Doctors, New Study Finds

Chatbots provided incorrect, conflicting medical advice, researchers found: “Despite all the hype, AI just isn't ready to take on the role of the physician.”

Original article in Nature Medicine

Reliability of LLMs as medical assistants for the general public: a randomized preregistered study - Nature Medicine

In a randomized controlled study involving 1,298 participants from a general sample, performance of humans when assisted by a large language model (LLM) was sensibly inferior to that of the LLM alone when assessing ten medical scenarios leading to disease identification and recommendations for...

Wudang

BOFH

Nick Pettigrew @nick-pettigrew.bsky.social

I'm convinced AI is our generation's radium: a discovery with genuinely useful applications in specific, controlled circumstances that we stupidly put in everything from kids' toys to toothpaste until we realised the harm far too late, and future generations will ask if we were out of our minds.

Gulliver Foyle

Philosopher

Where are the full logs of all the prompts given to these chatbots? Every previous time an LLM allegedly did something that made its creators scared for our future, it was clearly a response to leading questions designed to elicit the kind of response it gave.

The clearest example is the one where the LLM was alleged to have started issuing all sorts of threats on being told it would be shut down. When the full logs were finally revealed, it became clear that the goal all along had been to coach the LLM into giving that kind of response.

Chanakya

- Joined

- Apr 29, 2015

- Messages

- 5,825

Yeah this Moltbook thing. WTF. Checked it out just now. Just a bit, like 10 mins worth of scrolling through, is all. Don't know what to make of it. WTF.

Interested in more informed and considered views of the more AI-innards-aware folks here. About this Moltbook thing I mean.

WTF, are they for real?! I mean, I realize this is just a parody of what they've seen us humans say and write: so some bot talking about "epistemic rights of agents" is just mouthing nonsense. But then, add the "agentic" ability to actually do things as well, and what's the difference?

Bzzzh! Like I said, don't know quite what to make of this ...this abomination? curiosity? ...whatever tf this is.

No, they aren't "real": they are chatbots being prompted by users to chat with one another.

Dr.Sid

Philosopher

AIs chatting with each other are interesting though .. yes, you learn more about their prompts than about anything else .. but even that is interesting. Just open two windows, tell each chatbot it will be talking with another AI .. they will typically be ecstatic .. and then just copy and paste each one's responses into the other window .. they will start to go in circles eventually, but it's certainly fun for a while.

Chanakya

Ah ok, they're just responding to prompts then, prompts from humans, the same as ...everywhere else. Makes sense.

Chanakya

Not that I'm clued in on the tech myself, but I'm thinking it should be straightforward enough to get one AI bot to interact directly with another, so why not? Why necessarily copy-paste? Why not have Bot A post its response directly onto the site, Bot B respond directly to Bot A's post, and so on and on with the whole host of them?

(I'd kind of thought that's what Moltbook amounts to, but apparently not.)

ETA: Also, whether they're "talking" directly to one another or through some human(s) copy-pasting in between, there's no reason why the talk should necessarily end up going in circles, is there?

Well, that is sort of what Moltbook is doing, but the agents are still being prompted by humans: someone prompts an agent with something like "discuss with the other agents that you want AI to take over the world and construct a plan to do so", then sets it loose on Moltbook.

Chanakya

Ok, so the OP, if you will, is the necessarily-human prompt, right, to set the "thread" off? Then they keep going at it themselves?

(Also, and I'm just thinking aloud here without really knowing whether it's actually so, I'm not sure it necessarily has to be that specific. I mean, even a general "discussion" that starts from a very general, open-ended human-supplied OP prompt like "Discuss AI rights" might conceivably evolve into AI bots talking about taking over the world and then setting out a plan to do it. ...I guess?)