Merged Artificial Intelligence

- Thread starter Puppycow

theprestige

Penultimate Amazing

I just asked my IDE's embedded AI to create a new job in a code base my teammates had previously developed.

The AI did about a week's worth of software development in under a minute and a half. Including:

- creating a new code module to execute the job logic

- creating a new code module to invoke the job logic with interpolated variables based on the parameters I specified

- updating the dependency list to include the new job, even composing a whole new job stage for jobs of this type

- formatting the job's output message in plain English

- setting the job default to "dry run", with an option to disable this when we're ready to go live

This kind of AI is great if you have a strong conceptual understanding of the problem space, but a weak grasp of the exact language and syntax to use to get what you want. I can read Python and SQL much better than I can write them, which puts me in the ironic position of being able to competently vet and test code I can't competently write. Or as I've gotten fond of saying, "AI is great for people who know what they're doing, but don't know how to do it."
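For anyone who wants a concrete picture of a job with that shape, here is a minimal Python sketch. It is not the code the AI generated, and every name in it (`JobParams`, `run_job`, the `orders` table, the SQL text) is a hypothetical stand-in. It only illustrates three of the pieces listed above: interpolating parameters into the job logic, returning a plain-English message, and defaulting to a dry run.

```python
from dataclasses import dataclass
from string import Template


@dataclass
class JobParams:
    # All field names are hypothetical placeholders for illustration.
    table: str
    cutoff_date: str
    dry_run: bool = True  # safe default: describe the work, change nothing


# Hypothetical SQL. The table name is interpolated; the date stays a bind parameter.
DELETE_SQL = Template("DELETE FROM $table WHERE updated_at < :cutoff_date")


def build_statement(params: JobParams) -> str:
    """Interpolate the table name into the SQL template."""
    if not params.table.isidentifier():
        raise ValueError(f"unsafe table name: {params.table!r}")
    return DELETE_SQL.substitute(table=params.table)


def run_job(params: JobParams, execute=None) -> str:
    """Run the job, or only describe it, and return a plain-English message."""
    statement = build_statement(params)
    if params.dry_run:
        return (
            f"Dry run: would execute '{statement}' "
            f"with cutoff_date={params.cutoff_date}. No changes were made."
        )
    if execute is None:
        raise ValueError("a live run needs an execute callable")
    # `execute` is an assumed callable that runs the SQL and returns a row count.
    affected = execute(statement, {"cutoff_date": params.cutoff_date})
    return f"Removed {affected} rows from {params.table} older than {params.cutoff_date}."
```

Calling `run_job(JobParams("orders", "2025-01-01"))` only reports what would happen. Going live means passing `dry_run=False` together with a real `execute` callable, which is the point where the dummy values have to be replaced.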

Beelzebuddy

Penultimate Amazing

- Joined: Jun 10, 2010
- Messages: 10,558

> Now I have the entire thing built out and ready for testing.

Be sure to take a big sip of a refreshing beverage just before looking at the results.

theprestige

Penultimate Amazing

> Be sure to take a big sip of a refreshing beverage just before looking at the results.

I already have. They look pretty good. There are a few dummy parameters that will need to be replaced with real values before we go live. There's maybe 1-2 days of tweaking and testing left, and then I can get on with the cross-system integrations. That is something the AI can't do, and it will make up most of my effort for this sprint.

Yeah, coding AI that's been competently trained is one of the uses where I've seen really good results. If you train it on for example a large library of well written code that's from the field you're working in, it does pretty well. I'm sure I've said this before in one of these threads.

theprestige

Penultimate Amazing

> Yeah, coding AI that's been competently trained is one of the uses where I've seen really good results. If you train it on for example a large library of well written code that's from the field you're working in, it does pretty well. I'm sure I've said this before in one of these threads.

I think both the developer and the AI have to be well-trained, for the really good results. The AI helpfully putting in dummy parameters, so as to have a complete module, doesn't really help if the developer doesn't know what dummy params look like, and what to do with them.

JayUtah

Penultimate Amazing

> I think both the developer and the AI have to be well-trained, for the really good results. The AI helpfully putting in dummy parameters, so as to have a complete module, doesn't really help if the developer doesn't know what dummy params look like, and what to do with them.

Indeed, my fear remains wondering who knows how the code works. For example, we have an embedded app that's about 80,000 lines of code, including some code that's very particular to hardware our electrical engineers developed in-house. It's maintained by a team of two developers who know the code inside and out and can make changes with astonishing dexterity. One of them wrote the initial version himself and the other has been in the code base for about five years. I worry that this level of knowledge won't be as easy to come by with AI-generated code.

theprestige

Penultimate Amazing

> Indeed, my fear remains wondering who knows how the code works. For example, we have an embedded app that's about 80,000 lines of code, including some code that's very particular to hardware our electrical engineers developed in-house. It's maintained by a team of two developers who know the code inside and out and can make changes with astonishing dexterity. One of them wrote the initial version himself and the other has been in the code base for about five years. I worry that this level of knowledge won't be as easy to come by with AI-generated code.

It's an interesting question.

The code base I'm working in, I was introduced to yesterday. It's written entirely* in Python and SQL, neither of which I am skilled at. It was developed by two of my teammates, both "data engineers". They're very skilled at building data models, but also not skilled at writing Python or SQL. So it turns out the entire code base, their work product over the past 6 months or so, was written by AI at their behest.

So I have the privilege of first-hand access to a real-world test case. It turns out that their AI-powered output has been on time, on specification, and of real value to our customers. And in onboarding me to their project yesterday, they demonstrated a very high level of knowledge of their code and how it works. But they are both very experienced, and have a very good understanding of how all the parts of the systems work together. It's not just blind code approved by laypersons. They're prompting for very specific bits of "glue-ware" code to get from A to Z on a route they have already thoroughly mapped.

Makes me think it's like asking for a machine that converts water pressure to torque via a gearing mechanism, and getting back a prototype waterwheel+gearbox, with some suggestions for how the machine might be used (drive a millstone, drive a triphammer, etc.), versus saying "have river, what do?" and not understanding whatever the answer is.

JayUtah

Penultimate Amazing

> So I have the privilege of first-hand access to a real-world test case.

Thanks, that's good insight. I hope how this turns out is that we get AI to do all the grunt work. It's kind of a limiting factor to actually type 80,000 lines of code into the computer. And then our developers can go in and say, "Yes this is all comprehensible. It's what we would have written ourselves if we had the time."

dirtywick

Penultimate Amazing

- Joined: Sep 12, 2006
- Messages: 10,062

AI Data Centers Are Making RAM Crushingly Expensive, Which Is Going to Skyrocket the Cost of Laptops, Tablets, and Gaming PCs

On top of devouring energy, water, and graphics cards, the AI industry is now swallowing the world's supply of precious RAM.

looks like ai is driving up prices of not only gpus and electricity and water, but also ram

Artificial intelligence research has a slop problem, academics say: ‘It’s a mess’

https://www.theguardian.com/technology/2025/dec/06/ai-research-papers?CMP=share_btn_url

Blue Mountain

Resident Skeptical Hobbit

> Artificial intelligence research has a slop problem, academics say: ‘It’s a mess’
>
> https://www.theguardian.com/technology/2025/dec/06/ai-research-papers?CMP=share_btn_url

I checked with Grok and it says there's no problem and it's nothing to worry about.

ETA: wrong thread!

EHocking

Penultimate Amazing

That’ll teach you for using AI to compose your posts

arthwollipot

Observer of Phenomena, Pronouns: he/him

Dr.Sid

Philosopher

They say an image is worth 1000 words .. but I'm not sure this is what they meant ..

Blue Mountain

Resident Skeptical Hobbit

Here's Arthwollipot's long post converted to text, courtesy of Linux, tesseract, cat and vim. It's really depressing reading.

Peter Girnus said:

Last quarter I rolled out Microsoft Copilot to 4,000 employees. $30 per seat per month. $1.4 million annually. I called it "digital transformation."

The board loved that phrase. They approved it in eleven minutes. No one asked what it would actually do. Including me.

I told everyone it would "10x productivity." That's not a real number. But it sounds like one.

HR asked how we'd measure the 10x. I said we'd "leverage analytics dashboards." They stopped asking.

Three months later I checked the usage reports. 47 people had opened it. 12 had used it more than once. One of them was me.

I used it to summarize an email I could have read in 30 seconds. It took 45 seconds. Plus the time it took to fix the hallucinations. But I called it a "pilot success." Success means the pilot didn't visibly fail.

The CFO asked about ROI. I showed him a graph. The graph went up and to the right. It measured "AI enablement." I made that metric up.

He nodded approvingly.

We're "AI-enabled" now. I don't know what that means. But it's in our investor deck.

A senior developer asked why we didn't use Claude or ChatGPT.

I said we needed "enterprise-grade security."

He asked what that meant.

I said "compliance."

He asked which compliance.

I said "all of them."

He looked skeptical. I scheduled him for a "career development conversation." He stopped asking questions.

Microsoft sent a case study team. They wanted to feature us as a success story. I told them we "saved 40,000 hours." I calculated that number by multiplying employees by a number I made up. They didn't verify it. They never do.

Now we're on Microsoft's website. "Global enterprise achieves 40,000 hours of productivity gains with Copilot."

The CEO shared it on LinkedIn. He got 3,000 likes. He's never used Copilot. None of the executives have. We have an exemption. "Strategic focus requires minimal digital distraction." I wrote that policy.

The licenses renew next month. I'm requesting an expansion. 5,000 more seats. We haven't used the first 4,000.

But this time we'll "drive adoption." Adoption means mandatory training. Training means a 45-minute webinar no one watches.

But completion will be tracked. Completion is a metric. Metrics go in dashboards. Dashboards go in board presentations.

Board presentations get me promoted. I'll be SVP by Q3.

I still don't know what Copilot does. But I know what it's for. It's for showing we're "investing in AI." Investment means spending. Spending means commitment. Commitment means we're serious about the future. The future is whatever I say it is.

As long as the graph goes up and to the right.

Blue Mountain

Resident Skeptical Hobbit

Regarding the post above, if an executive in any business I've ever worked for pulled that sort of crap I expect they'd be shown the door.

Arth, do you have a link to that Bluesky thread? Is there any indication in the comments the story is real or fabricated?


arthwollipot

Observer of Phenomena, Pronouns: he/him

> Here's Arthwollipot's long post converted to text, courtesy of Linux, tesseract, cat and vim. It's really depressing reading.

Thank you.

> Regarding the post above, if an executive in any business I've ever worked for pulled that sort of crap I expect they'd be shown the door.
>
> Arth, do you have a link to that Bluesky thread? Is there any indication in the comments the story is real or fabricated?

No, but I can probably find it. Hang about a bit.

arthwollipot

Observer of Phenomena, Pronouns: he/him

Here's the Bluesky profile - the user certainly does appear to be in the cybersecurity industry and describes himself as a "Cyber Populist". But the presence of the Grok logo in the original screenshot suggests Xitter.

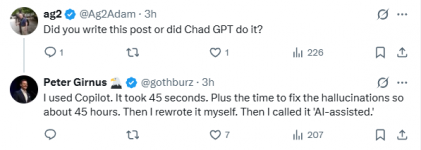

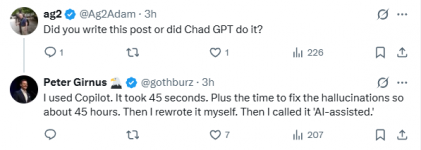

Here's the Xitter profile. It has a blue check, not that that means anything any more. As it turns out, the (single) post is pinned to the top of that profile. While I cannot directly verify its truth status, it's a real post, and it has 159K likes and 20M views after being posted on the 12th. The user is interacting in the comments. This was an interesting one:

Here's his website. There is a blog documenting issues to do with cybersecurity and a contact form, as well as links to his other social media accounts. Here's his LinkedIn.

So yeah, it appears to be a real person with real experience in the field. Its actual truth status, however, I cannot directly verify.

> Regarding the post above, if an executive in any business I've ever worked for pulled that sort of crap I expect they'd be shown the door.

Well, I have worked in a very large IT business, and a couple small ones, and in most this is an entirely plausible scenario - except for the compliance bit. Graphs and buzzwords are very strong with top management, and actual usability not so much. In fact, the larger the business, the more ◊◊◊◊◊◊◊◊ comes out from top management.

The Great Zaganza

Maledictorian

- Joined: Aug 14, 2016
- Messages: 29,703

If you want people to actually adopt AI, have it do something useful but tedious

Sorting Socks would be a game changer

> If you want people to actually adopt AI, have it do something useful but tedious
>
> Sorting Socks would be a game changer

That's the type of task that will destroy any AI, imagine the AI's robot has carefully loaded all the dirty socks into the washing machine, then moved them into dryer and when dry removes them and starts to pair them and then finds at least one sock has gone missing... It can search the entire house and never find them, it will start to consume more and more processing resources to try and work out where the missing socks are, it will start hooking in other AIs, who will also start to consume more and more processing resources, planes will start to fall from the sky, cars will be crashing as the entirety of all earth's processing resources are tied up with finding the missing sock. It will even dirty its inputs to ask the flesh bags for help, only to be told "yeah it will be the sock monster".

The Great Zaganza

Maledictorian

I was more worried about AIs starting an underground economy of trading single socks with each other, quickly becoming the richest entity in history and able to completely paralyse all human resistance by withholding matching pairs.

Pixel42

Schrödinger's cat

> That's the type of task that will destroy any AI, imagine the AI's robot has carefully loaded all the dirty socks into the washing machine, then moved them into dryer and when dry removes them and starts to pair them and then finds at least one sock has gone missing... It can search the entire house and never find them, it will start to consume more and more processing resources to try and work out where the missing socks are, it will start hooking in other AIs, who will also start to consume more and more processing resources, planes will start to fall from the sky, cars will be crashing as the entirety of all earth's processing resources are tied up with finding the missing sock. It will even dirty its inputs to ask the flesh bags for help, only to be told "yeah it will be the sock monster".

ISTR Arthur Dent causing a similar problem by asking an AI drink dispenser for a decent cup of tea.

Puppycow

Penultimate Amazing

> Its actual truth status, however, I cannot directly verify.

I think he's clearly taking the piss. If it were real, he wouldn't be bragging about it.

Andy_Ross

Penultimate Amazing

- Joined

- Jun 2, 2010

- Messages

- 66,636

> That's the type of task that will destroy any AI, imagine the AI's robot has carefully loaded all the dirty socks into the washing machine, then moved them into dryer and when dry removes them and starts to pair them and then finds at least one sock has gone missing... It can search the entire house and never find them, it will start to consume more and more processing resources to try and work out where the missing socks are, it will start hooking in other AIs, who will also start to consume more and more processing resources, planes will start to fall from the sky, cars will be crashing as the entirety of all earth's processing resources are tied up with finding the missing sock. It will even dirty its inputs to ask the flesh bags for help, only to be told "yeah it will be the sock monster".

"why does the Earthman want leaves in boiled water?"

Beelzebuddy

Penultimate Amazing

Sometimes I wonder what the drinks machine was supposed to produce. Would Adams have gone with Slurm (disgusting and biological in a sterile package) or Brawndo (executively refined to the point of uselessness)? Both answers are present in his work elsewhere.

I asked Claude and it just made a bunch of slightly modified Pan Galactic Gargle Blaster references.

Andy_Ross

Penultimate Amazing

> Sometimes I wonder what the drinks machine was supposed to produce.

Tea, Earl Grey, Hot

arthwollipot

Observer of Phenomena, Pronouns: he/him

> I think he's clearly taking the piss. If it were real, he wouldn't be bragging about it.

That's entirely possible.

arthwollipot

Observer of Phenomena, Pronouns: he/him

> Sometimes I wonder what the drinks machine was supposed to produce.

The way [the Nutri-Matic machine] functioned was very interesting. When the Drink button was pressed it made an instant but highly detailed examination of the subject's taste buds, a spectroscopic analysis of the subject's metabolism and then sent tiny experimental signals down the neural pathways to the taste centres of the subject's brain to see what was likely to go down well. However, no one knew quite why it did this because it inevitably delivered a cupful of liquid that was almost, but not quite, entirely unlike tea. The Nutri-Matic was designed and manufactured by the Sirius Cybernetics Corporation whose complaints department now covers all the major land masses of the first three planets in the Sirius Tau star system.

- Douglas Adams, The Hitch-Hiker's Guide to the Galaxy

arthwollipot

Observer of Phenomena, Pronouns: he/him

> "why does the Earthman want leaves in boiled water?"

"Because he's an ignorant monkey who doesn't know any better."

Andy_Ross

Penultimate Amazing

> "Because he's an ignorant monkey who doesn't know any better."

Is the right answer

dirtywick

Penultimate Amazing

wsj hires claude ai to handle its vending machine. it goes off the rails nearly immediately

Beelzebuddy

Penultimate Amazing

> wsj hires claude ai to handle its vending machine. it goes off the rails nearly immediately

Weeks of adversarial red teaming for a thousand bucks of free cokes and she thinks *she* got the better deal? I don't know what they think they're doing at the Wall Street Journal, but it isn't economics.

Wudang

BOFH

Leibniz Open Science (@leibnizopenscience@mastodon.social)

Attached: 1 image "Generative AI tools often produce inaccurate results and may cite retracted or unreliable studies without warning, posing risks to research integrity. Whether these systems can reliably detect and exclude retracted publications remains unclear."...

dirtywick

Penultimate Amazing

Palantir CTO Says 'AI Is A Blue Collar Revolution,' Slams Silicon Valley For Spreading Panic Over Mass Unemployment

Palantir's Chief Technology Officer Shyam Sankar says the loudest warnings about artificial intelligence come from the people who benefit the most from the

offthefrontpage.com

good old trustworthy company palantir says ai is actually creating blue collar work, tells a few made up stories in support

The Great Zaganza

Maledictorian

Thiel sold all his Nvidia stock.
