Wudang

BOFH

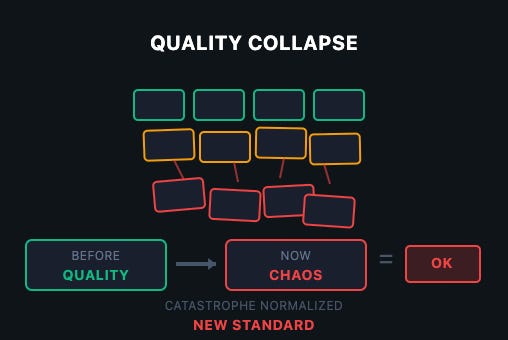

The Great Software Quality Collapse: How We Normalized Catastrophe

The Apple Calculator leaked 32GB of RAM.

I thought of posting this to the AI thread, but AI is just part of the problem.

Our research found:

- AI-generated code contains 322% more security vulnerabilities

- 45% of all AI-generated code has exploitable flaws

- Junior developers using AI cause damage 4x faster than without it

- 70% of hiring managers trust AI output more than junior developer code

We've created a perfect storm: tools that amplify incompetence, used by developers who can't evaluate the output, reviewed by managers who trust the machine more than their people.

But AI wasn't the cause of the big CrowdStrike fubar:

Sanitize your inputs.

Total economic damage: $10 billion minimum.

The root cause? They expected 21 fields but received 20.

One. Missing. Field.

This wasn't sophisticated. This was Computer Science 101 error handling that nobody implemented. And it passed through their entire deployment pipeline.
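For anyone wondering what "sanitize your inputs" looks like in practice, here's a minimal Python sketch. It is NOT CrowdStrike's actual code. It assumes records arrive as lists of string fields, and the names `EXPECTED_FIELDS`, `MalformedRecord`, `parse_record` and `load_records` are made up for illustration. The point is simply: check the field count before you touch the fields, and reject a bad record instead of reading past its end.

```python
from typing import List, Sequence, Tuple

# Hypothetical: the number of fields the consumer was written to expect.
EXPECTED_FIELDS = 21


class MalformedRecord(ValueError):
    """Raised when an input record does not have the expected shape."""


def parse_record(fields: Sequence[str]) -> Tuple[str, ...]:
    # Validate the count up front instead of trusting whoever produced the data.
    if len(fields) != EXPECTED_FIELDS:
        raise MalformedRecord(
            f"expected {EXPECTED_FIELDS} fields, got {len(fields)}"
        )
    # Only after the check is it safe to index every one of the fields.
    return tuple(fields)


def load_records(
    records: Sequence[Sequence[str]],
) -> Tuple[List[Tuple[str, ...]], List[str]]:
    """Parse each record; report malformed ones instead of crashing on them."""
    good: List[Tuple[str, ...]] = []
    errors: List[str] = []
    for n, fields in enumerate(records):
        try:
            good.append(parse_record(fields))
        except MalformedRecord as exc:
            errors.append(f"record {n}: {exc}")
    return good, errors


if __name__ == "__main__":
    complete = [f"f{i}" for i in range(21)]
    one_short = complete[:20]  # one. missing. field.
    parsed, problems = load_records([complete, one_short])
    print(len(parsed), problems)
```

Python would at least throw an `IndexError` if you skipped the check. In a language without bounds checking, skipping it means reading memory that isn't yours, which is a much worse place to find out about a missing field.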