Robin

Those behind the PEAR project seem inordinately fond of one particular graph. It is the centrepiece of their website, the background image for many of their web pages, and the background image of founder Jahn's web page:

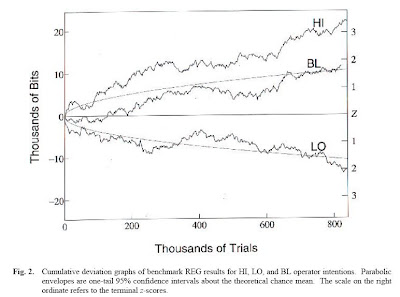

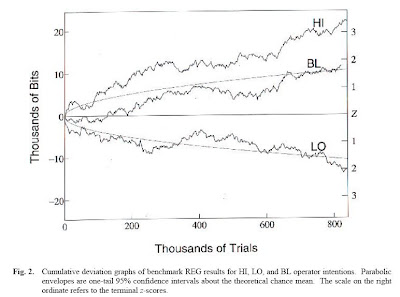

Here it is in its more technical format:

This is the cumulative z score derived from about 140 hours of experimentation over 12 years (experiment described here).
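For anyone unfamiliar with the plot, here is a minimal sketch of how a running (cumulative) z-score is built up trial by trial. It assumes each trial is the count of 1s among 200 fair random bits, so with no effect a trial has mean 100 and variance 50. That trial size and the `cumulative_z` helper are illustrative assumptions, not taken from PEAR's published code.

```python
import math


def cumulative_z(trial_sums, bits_per_trial=200):
    """Running z-score after each trial, under the null hypothesis.

    Hypothetical model: each trial is the number of 1s among
    `bits_per_trial` fair random bits, so with no effect a trial
    has mean n/2 and variance n/4.
    """
    mean = bits_per_trial / 2
    variance = bits_per_trial / 4
    running_deviation = 0.0
    zs = []
    for k, s in enumerate(trial_sums, start=1):
        running_deviation += s - mean
        # The sum of k independent deviations has variance k * variance.
        zs.append(running_deviation / math.sqrt(k * variance))
    return zs
```

For example, `cumulative_z([110, 95, 100])` gives roughly 1.41, 0.50 and 0.41. A single early lucky trial dominates at first and is then diluted, which is why the plotted curve wanders before settling into a trend.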

So the obvious issue that springs to mind is that they have included more of the data from experiments that favoured their hypothesis than from experiments that did not.
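Why this matters can be sketched with a little arithmetic. Assume that with no effect each session's z-score is standard normal, and that the omitted sessions are exactly the lowest-scoring fraction $p$. Both of these are simplifying assumptions. What remains is then a truncated normal whose mean is no longer zero, and the combined z over $N$ kept sessions grows with $\sqrt{N}$:

$$
\begin{aligned}
z_p &= \Phi^{-1}(p), \qquad \mu_p = \mathbb{E}[Z \mid Z > z_p] = \frac{\varphi(z_p)}{1 - p} \\
p = 0.01:\quad z_p &\approx -2.326, \qquad \varphi(z_p) \approx 0.0267, \qquad \mu_{0.01} \approx 0.027 \\
Z_N &= \frac{1}{\sqrt{N}} \sum_{i=1}^{N} z_i \approx \mu_p \sqrt{N}
\end{aligned}
$$

On these assumptions the expected cumulative z climbs steadily, with the same $\sqrt{N}$ growth a small constant genuine effect would produce. It passes 1.96 after roughly 5,300 kept sessions.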

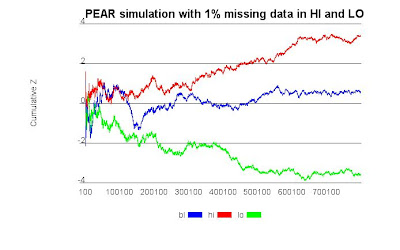

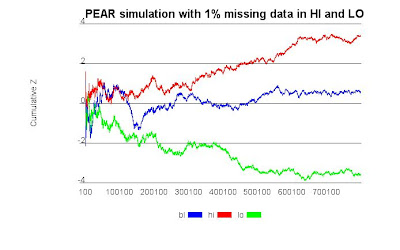

I simulated this procedure using a random number generator, but left out a few unfavourable results, representing just 1% of all sessions. Here is the result:

It looks remarkably similar, and I get the same means and standard deviations too.
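A minimal Python sketch of that kind of simulation follows. The post does not give the trial, session or sample sizes used, so `BITS_PER_TRIAL`, `TRIALS_PER_SESSION`, `N_SESSIONS` and the helper names are all hypothetical. "Unfavourable" is assumed to mean the lowest-scoring sessions. Sessions are pooled with Stouffer's method, which may not match how PEAR combined its data.

```python
import math
import random
import statistics

# Hypothetical parameters, for illustration only.
BITS_PER_TRIAL = 200
TRIALS_PER_SESSION = 50
N_SESSIONS = 2000


def session_z(rng, trials=TRIALS_PER_SESSION, bits=BITS_PER_TRIAL):
    """z-score of one session of purely random trials (no effect at all)."""
    deviation = sum(
        bin(rng.getrandbits(bits)).count("1") - bits / 2 for _ in range(trials)
    )
    return deviation / math.sqrt(trials * bits / 4)


def simulate(n_sessions=N_SESSIONS, drop_fraction=0.01, seed=1):
    """Return (all session z-scores, z-scores left after quiet omission)."""
    rng = random.Random(seed)
    zs = [session_z(rng) for _ in range(n_sessions)]
    n_drop = round(n_sessions * drop_fraction)
    # Omit the least favourable sessions, keeping the rest in their original
    # order so a cumulative plot could still be drawn from them.
    dropped = set(sorted(range(n_sessions), key=zs.__getitem__)[:n_drop])
    kept = [z for i, z in enumerate(zs) if i not in dropped]
    return zs, kept


def stouffer(zs):
    """Combined z-score of independent sessions (Stouffer's method)."""
    return sum(zs) / math.sqrt(len(zs))


all_z, kept_z = simulate()
print(f"all sessions:      combined z = {stouffer(all_z):.2f}")
print(f"lowest 1% omitted: combined z = {stouffer(kept_z):.2f}")
print(f"mean/sd before: {statistics.mean(all_z):.3f} / {statistics.stdev(all_z):.3f}")
print(f"mean/sd after:  {statistics.mean(kept_z):.3f} / {statistics.stdev(kept_z):.3f}")
```

Dropping the sessions by rank rather than by a fixed z threshold keeps the omitted share at exactly 1%. The per-session mean and standard deviation barely move, while the combined z is pushed upward.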

You don't even have to allege misconduct. This would be consistent with experimenters feeling more motivated to commit favourable data than unfavourable data, or with subjects occasionally seeing the results not go as expected and saying things like "I wasn't ready" or "I pressed the wrong button".

Of course, you can get the same result by flipping one in 10,000 bits, just as the PEAR people say is happening. But given these competing explanations:

1. A new and mysterious form of energy hitherto unknown to science, or

2. Occasional lapses in lab discipline over 12 years.

Which is really more likely?